About Me

I am a fifth-year Ph.D. student at Peking University, advised by Prof. Zongqing Lu. I received my Bachelor's degree from the Turing Class at Peking University in 2021.

My research interests lie in Reinforcement Learning (RL) and Embodied AI. Currently, I am focusing on:

- Scalable learning methods for dexterous-hand and humanoid-robot manipulation.

- Efficient RL for open-world, embodied agents.

I am open to collaborations and discussions.

Selected Papers

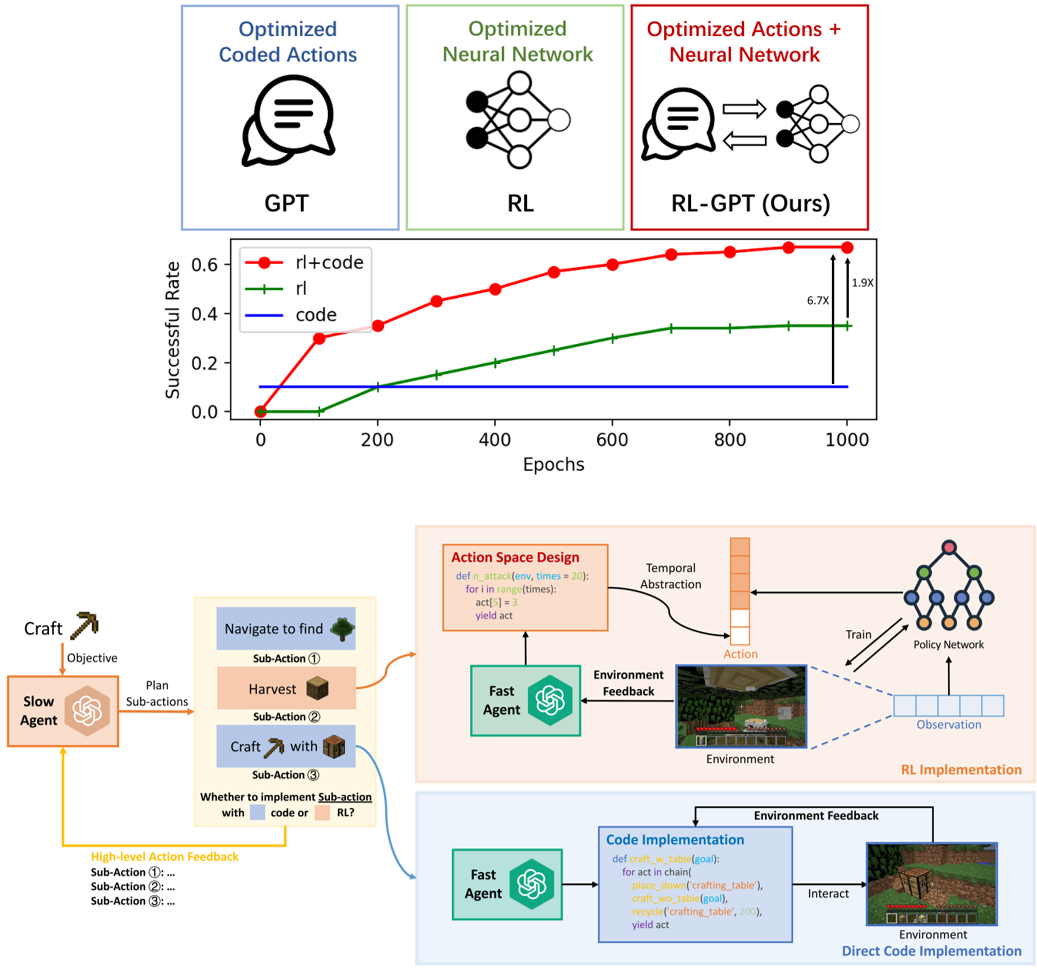

RL-GPT: Integrating Reinforcement Learning and Code-as-Policy

An LLM agent equipped with RL for continual learning in open-world environments.

Experience

Research Intern (2025 - Present).

We are a start-up team on embodied AI and foundation models.

I am leading research on dexterous manipulation.

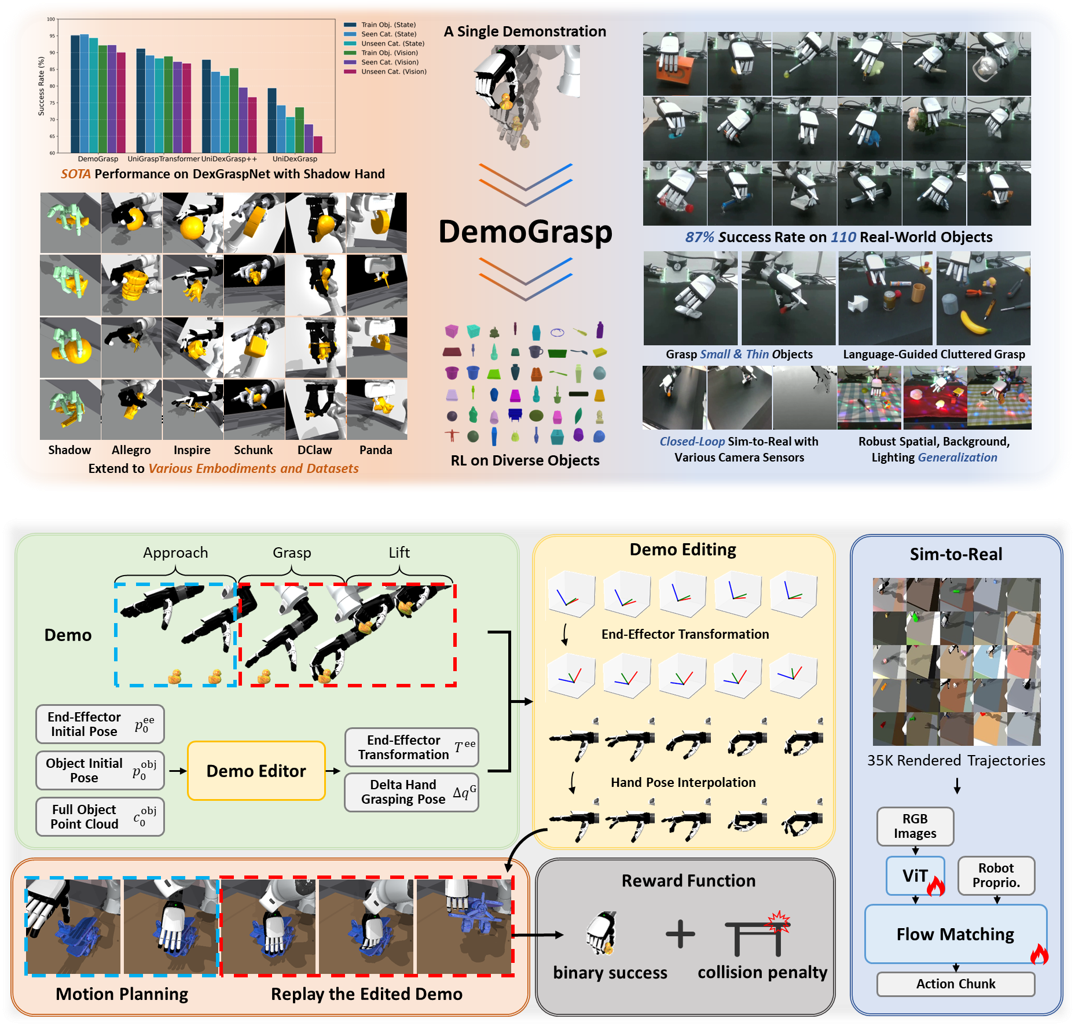

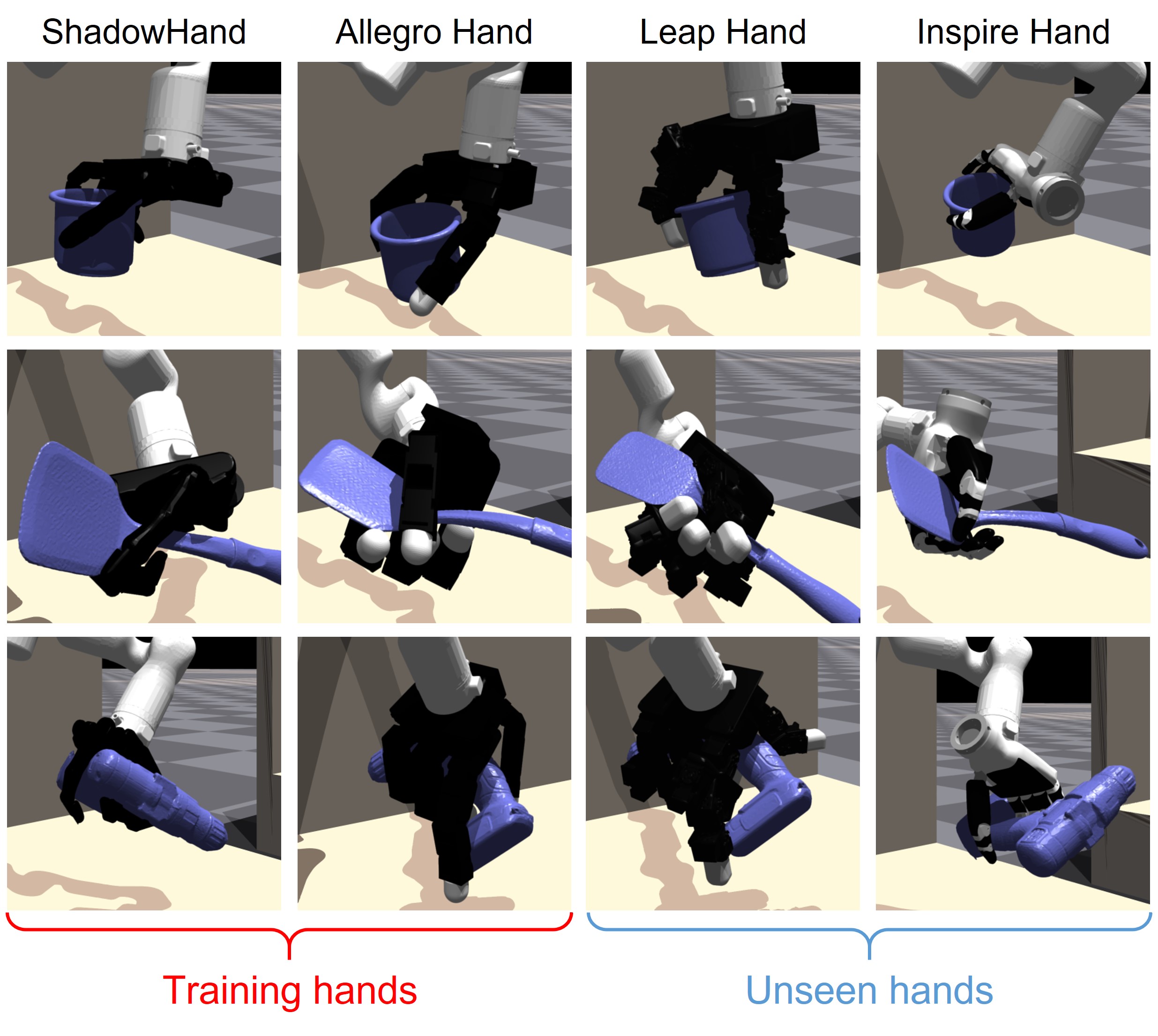

Representative works: Being-0, Being-H0, DemoGrasp.

Research Intern (2023 - 2025).

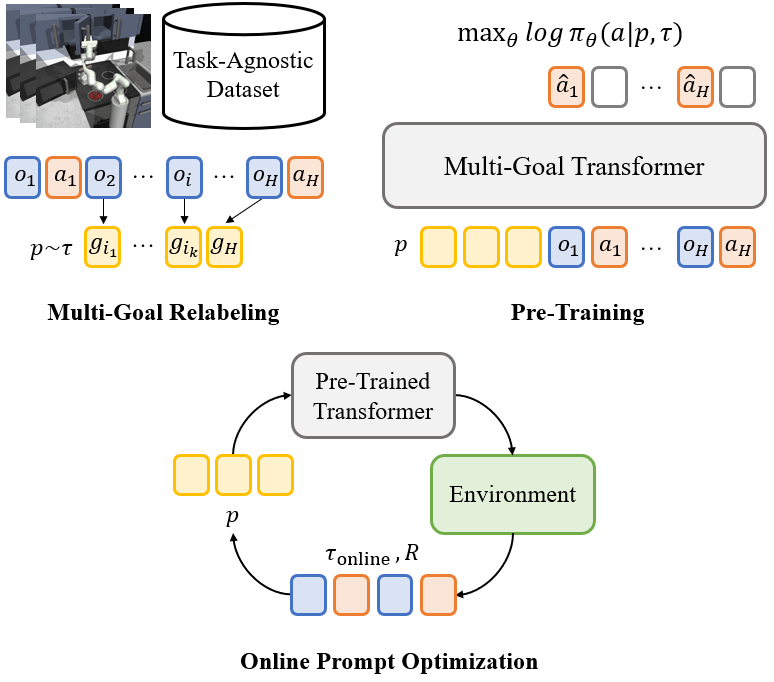

I focused on RL research, including RL for open-world agents and RL for generalizable dexterous manipulation.

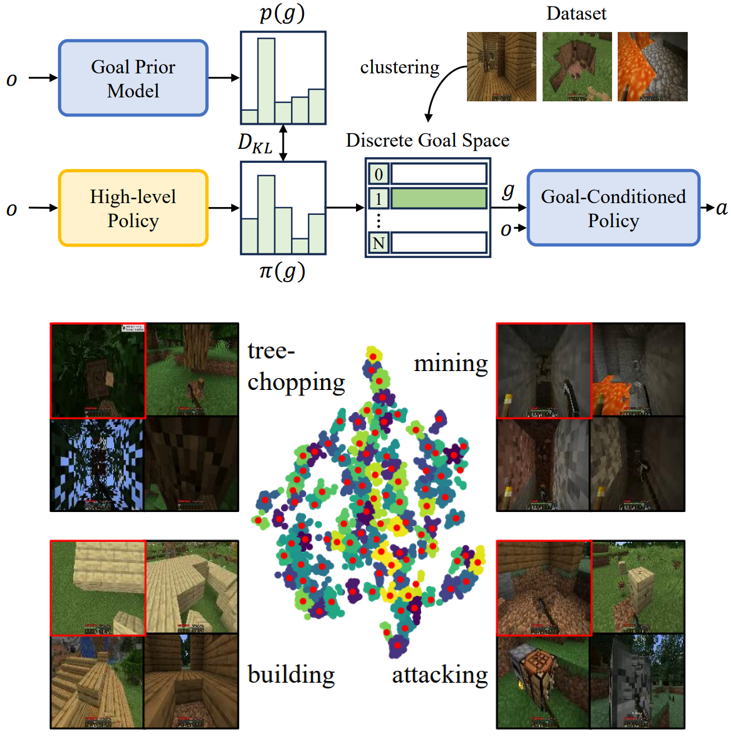

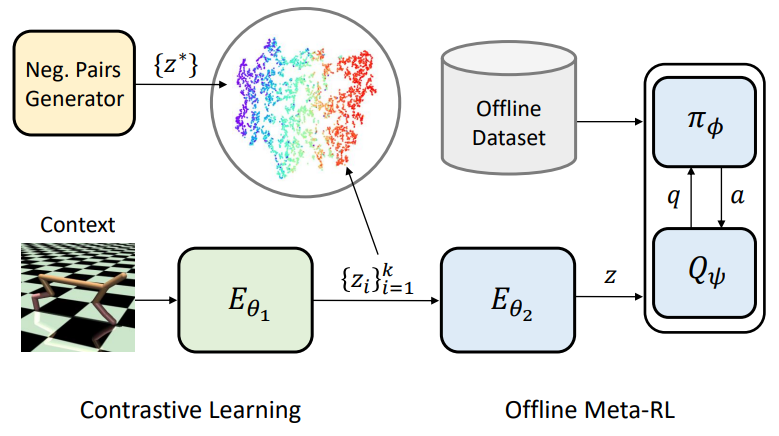

Representative works: PTGM, MGPO, Plan4MC, RL-GPT, CrossDex, ResDex, BiDexHD.

Research Intern (2019 - 2021). Advised by Prof. Hao Dong.

I studied generative models and the learning of physical interactions.

Representative work: DMotion.

Selected Awards

- Peking University President Scholarship | 北京大学校长奖学金 (2022, 2021)

- National Scholarship | 国家奖学金 (2018)

- Peking University Merit Student | 北京大学三好学生 (2025, 2018)

- Second Class Award in Chinese Physics Olympiad (Finals) | 全国中学生物理竞赛决赛二等奖 (2016)

- See CV for the full list.

Services

Conference Reviewer

ICML'22,24,25; NeurIPS'22,23,24,25; ICLR'24,25,26; AAAI'23,24,25,26; CVPR'24,26; CoRL'25; IROS'25.

Teaching Assistant

Deep Reinforcement Learning, Zongqing Lu, 2023 Spring

Computational Thinking in Social Science, Xiaoming Li, 2020 Autumn

Deep Generative Models, Hao Dong, 2020 Spring